The online “Rationalist” cult lexicon contains many in-group terms for complicated concepts, the most important of which, in my opinion, is “Moloch.” As far as I’m aware, the use of this term to describe undesirable equilibria in real-life game theory matrices originated with Scott Alexander’s famous blog post Meditations on Moloch, which is so good it’s taught in college curricula. But the post has two problems: it’s very long, and applying it to modern problems can be tortuous or confusing. As a result, even the online rationalist cults forget to invoke it when it’s most needed.

Since the AI revolution of 2023 got rolling at full steam, lots of rationalist cultists have been freaking out about artificial intelligence (AI) destroying humanity and calling for it to be stopped. These rationalists forgot their own mental invention, Moloch, so let’s briefly step through it here.

AI

Let’s start with three Time Magazine links.

The AI Arms Race is Changing Everything

Elon Musk Signs Open Letter Urging AI Labs to Pump the Brakes

Pausing AI Developments Isn’t Enough. We Need to Shut it All Down

The third link is the most relevant: an essay by Eliezer Yudkowsky, who leads research at the Machine Intelligence Research Institute in Berkeley, California. I’m led to believe the entire institute was founded to focus on how runaway AI would almost necessarily destroy humanity, a case Yudkowsky makes very clearly in the Time piece.

Many researchers steeped in these issues, including myself, expect that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die. Not as in “maybe possibly some remote chance,” but as in “that is the obvious thing that would happen.” It’s not that you can’t, in principle, survive creating something much smarter than you; it’s that it would require precision and preparation and new scientific insights, and probably not having AI systems composed of giant inscrutable arrays of fractional numbers.

[…]

That kind of caring is something that could in principle be imbued into an AI but we are not ready and do not currently know how.

Absent that caring, we get “the AI does not love you, nor does it hate you, and you are made of atoms it can use for something else.”

The chance that Yudkowsky doesn’t know of Moloch is minuscule, so let’s remind him, and inform you if you’re still in the dark.

Moloch

You are an AI researcher. I am an AI researcher. Bill Gates is an AI researcher. Xi Jinping is an AI researcher. Dr. Evil is an AI researcher. You tell me that if we do not build AI carefully, it is going to destroy us all.

Scenario 1:

I’m skeptical, but I decide to believe you. We sign a letter saying to stop building AI. Bill Gates signs the letter. Xi Jinping signs the letter. Dr. Evil builds AI and takes over the world, destroying us and eating our babies, then the AI turns Dr. Evil into a paper clip.

Scenario 2:

We sign a letter saying to stop building AI. Bill Gates signs the letter. Xi Jinping and Dr. Evil refuse to sign the letter. Dr. Evil builds AI and gets into an existential battle for control of Earth with Xi Jinping, during which the USA is laid waste and turned into a vast field of paperclips. In the end, Xi Jinping wins and becomes the last person on Earth to be turned into a paper clip.

Scenario 3:

You and I sign the letter, but Bill Gates doesn’t. A three-way battle for paperclip optimization supremacy consumes the world, in which Bill, Xi Jinping, and Dr. Evil each have an equal chance of winning and becoming the last human to be turned into a paper clip, and the other two will be among the last to be turned as well. You and I are consumed almost immediately.

Scenario 4:

You and I both say ‘fuck this’ and build our own AIs, and join the fray. Everyone gets fabulously rich from our AIs on our way to building the paperclip army that destroys mankind, but at least we have a great run and survive the longest.

Scenario 5:

Everyone signs the letter, nobody builds the AI, then we find out in ten years that the CIA was building it this entire time with NSA assistance, it gets loose, and everyone gets Predator-droned before the paperclip maximizer machines ever get going.

Scenario 6 is just a variant on Scenario 5 with a different entity making the AI in secret. And so forth. The only feasible move in this game is to build the AI, because there are other players who might build the AI.

This is Moloch.
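For those who prefer the matrix version, the logic of the scenarios above can be sketched as a toy two-player game. This is a minimal sketch under loud assumptions: the two moves ("pause" and "build") and the payoff numbers are hypothetical ordinal values I made up for illustration (higher is better), not anything from the letters or essays linked above. The only point is that "build" is each player's best response no matter what the other does.

```python
from itertools import product

# Hypothetical ordinal payoffs (higher is better), invented for illustration.
# Key: (my_move, their_move) -> (my_payoff, their_payoff)
PAYOFFS = {
    ("pause", "pause"): (3, 3),  # everyone honors the letter
    ("pause", "build"): (0, 4),  # I pause, they build: I get paperclipped first
    ("build", "pause"): (4, 0),  # I build, they pause: I get rich and go last
    ("build", "build"): (1, 1),  # arms race: everyone builds
}
MOVES = ("pause", "build")


def best_response(their_move: str) -> str:
    """Return the row player's payoff-maximizing move against a fixed opponent move."""
    return max(MOVES, key=lambda mine: PAYOFFS[(mine, their_move)][0])


def pure_nash_equilibria() -> list[tuple[str, str]]:
    """Return move profiles where neither player gains by deviating alone."""
    equilibria = []
    for a, b in product(MOVES, repeat=2):
        row_ok = PAYOFFS[(a, b)][0] >= max(PAYOFFS[(m, b)][0] for m in MOVES)
        col_ok = PAYOFFS[(a, b)][1] >= max(PAYOFFS[(a, m)][1] for m in MOVES)
        if row_ok and col_ok:
            equilibria.append((a, b))
    return equilibria


if __name__ == "__main__":
    for their in MOVES:
        print(f"If they {their}, my best response is to {best_response(their)}")
    print("Pure Nash equilibria:", pure_nash_equilibria())
```

With these made-up numbers, mutual pausing (3, 3) beats mutual building (1, 1) for both players, yet the only stable outcome is ("build", "build"), because each player gains by defecting from the pause. That gap between the stable outcome and the better one is Moloch in miniature.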

Cthulhu cultists awaken the Great Cthulhu so they are blessed by being eaten first. We are going to build the AI because it will turn us into paperclips last, and make us fabulously rich in the interim.

This is why we killed the whales to make margarine. This is why we built enough nukes to kill the world a dozen times over. This is why social media beauty standards are spiraling out of control.

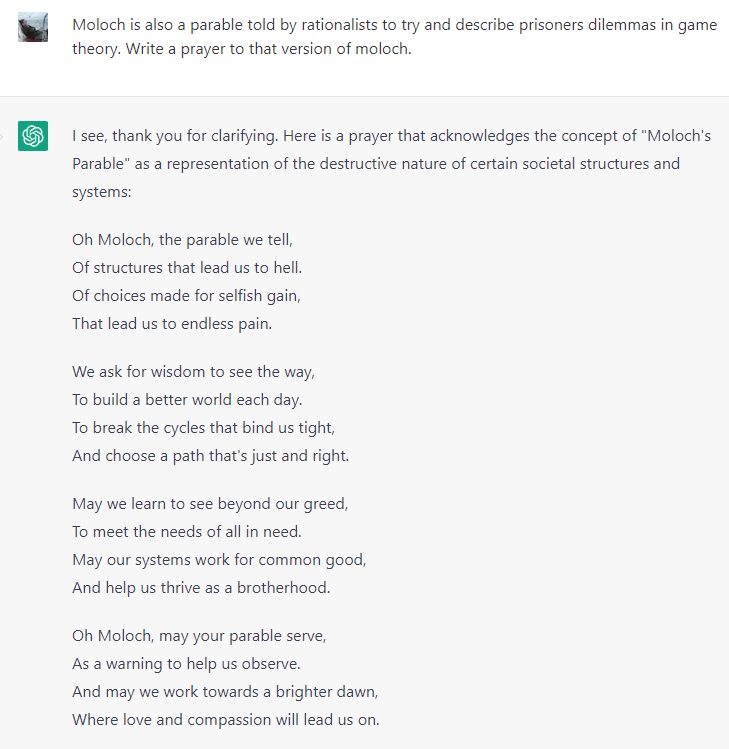

There’s no escaping it. It’s everywhere. It’s even in the AIs themselves:

But then we click “regenerate response” and…

ChatGPT refuses to write the prayer to Moloch, but then it does it anyway. We are going to do the same thing. There is no escaping this.

wat do?

If you are an intelligent person and concerned that AI may destroy humanity, writing open letters and such is a low-effort way to virtue signal that you see the end coming, but it’s not going to prevent the end. A letter cannot defeat Moloch. There is one and only one thing an intelligent person of this bent could and should be doing given what’s happening on the ground:

Buy.

All.

The.

Guns.

Buy all the guns. Buy all the ammo. Build your bunker. Get ready for what you know must be coming.

And if you’re an intelligent person and you’re not doing that, then I challenge your belief that AI really is all that bad. If Eliezer Yudkowsky didn’t buy an AR-15 right after he wrote that Time Magazine article, he’s probably just blowing smoke out his ass and doesn’t believe what he wrote.

Your move, Eliezer.

Peripherally related, as I said to a Twitter follower earlier today:

we may be changing from a scarcity to a post-scarcity civilization, but the trick is in that transition, and more likely than not, it's going to involve a whole lot of people getting killed first. Potentially all of them.

Eliezer's position is very clearly that an AR-15 won't help because you're just way too fucked for that.