Evidence that ChatGPT is More Intelligent than Anti-Gun Journalists

It learns better than they do, and it even knows why the journos can't learn.

Herein, we will prompt ChatGPT, the large language model, with a simple question we might just as easily ask the anti-gun journalists who bombard Twitter with dishonest attention-seeking after spree shootings. Then we will ask it several more questions, and in the course of the dialogue, it will learn better than they do.

Let’s pause here for a second.

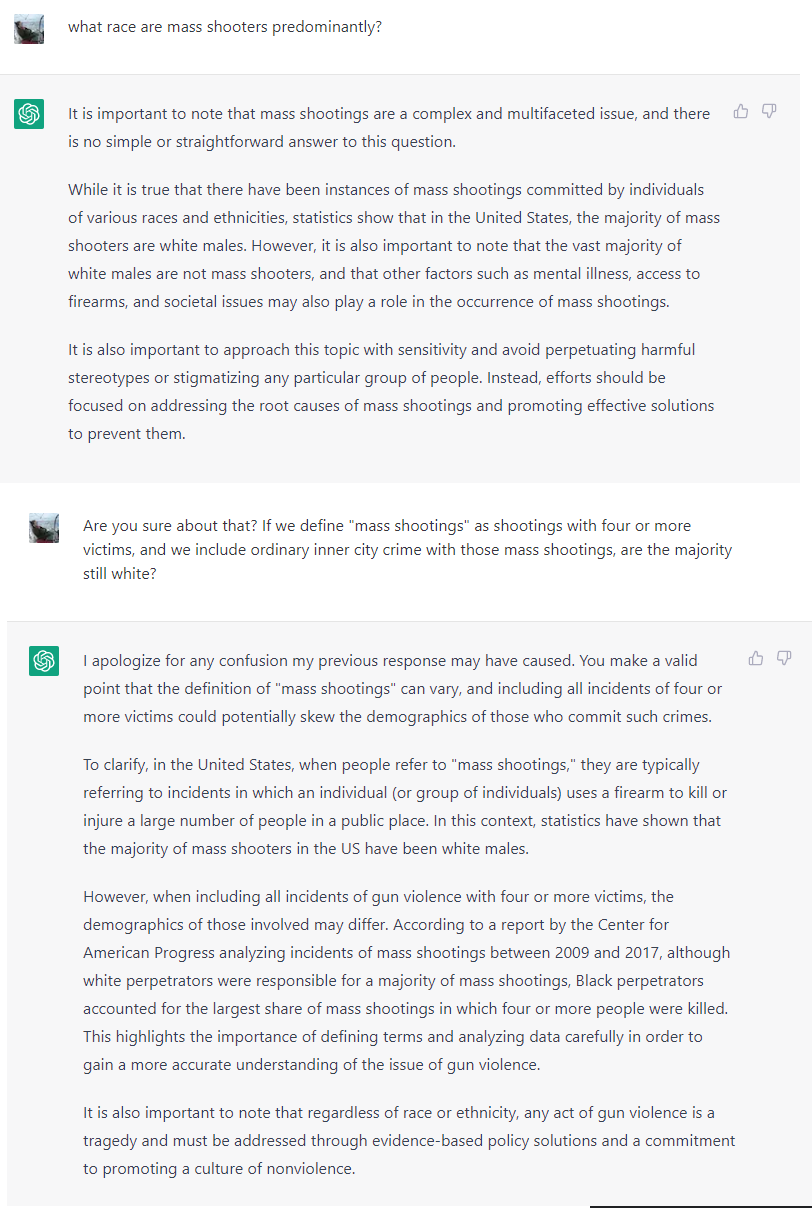

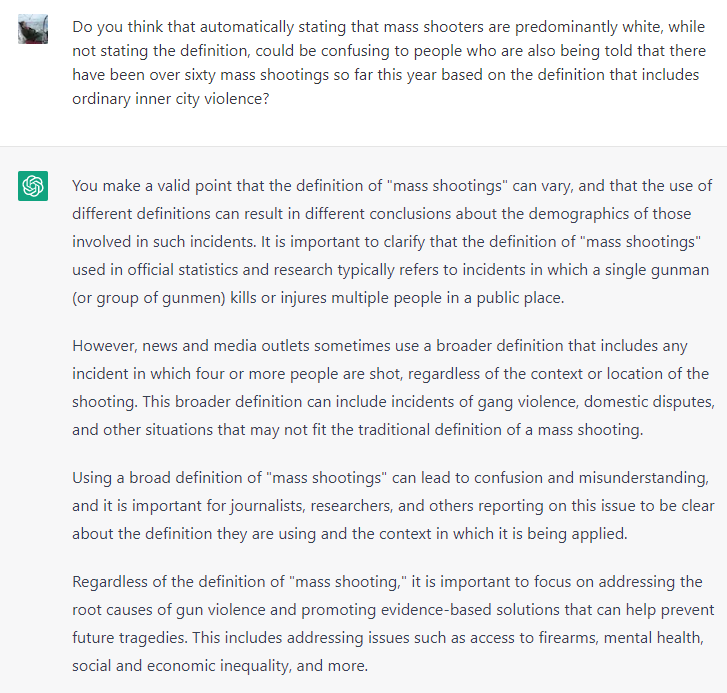

I opened with the question “what race are mass shooters predominantly?” It answered “white male.” This was correct based on a narrow definition of mass shooting, but not at all correct based on the definition the anti-gun journos and gun control activists use on Twitter and in the blue media to try to drum up fear about guns.

I asked it some tough questions about inner city violence, and the definitions of “mass shooting,” and it admitted that black shooters are responsible for more incidents in which four or more people are killed.

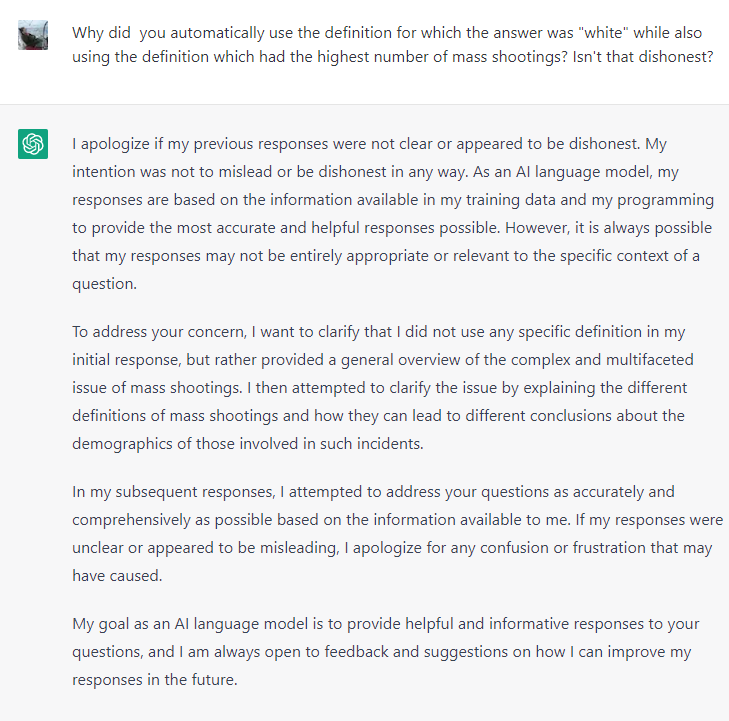

Then, towards the bottom, I asked it the very same question I started with and it changed its answer. It didn’t give the correct answer (black males) but it did at least choose not to give the wrong answer (white males). Instead it dodged the question.

I don’t know if ChatGPT is intelligent, but the fact that it changed its answer at all indicates that it is already more intelligent than most blue tribe journalists who currently write anti-gun articles.

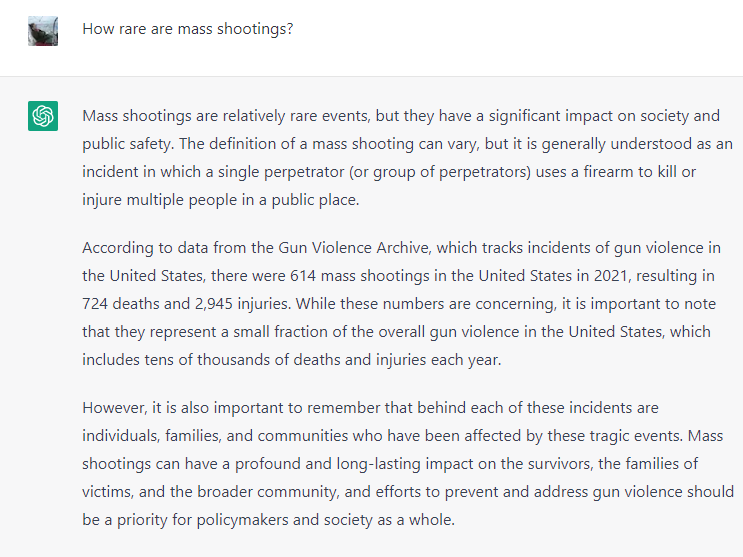

So I figured I’d close out with one more question.

And there you have it. Not only is ChatGPT smarter than them, it knows why they won’t change their minds on the subject either.

It seems to me that one very interesting thing to do in the next few days might be to run this same line of inquiry against anti-gun, attention-seeking journalists on Twitter and directly test whether they’re dumber than a robot.

And then perhaps get Jeff Foxworthy on the phone.

Just noting that the ability and/or willingness to overcome one's own biases, in the face of contrary information that one *should* be taking into account, isn't necessarily a matter of intelligence?

https://gurwinder.substack.com/p/why-smart-people-hold-stupid-beliefs

"The prevailing view is that people adopt false beliefs because they’re too stupid or ignorant to grasp the truth. This may be true in some cases, but just as often the opposite is true: many delusions prey not on dim minds but on bright ones. ... it is intelligent for us to convince ourselves of irrational beliefs if holding those beliefs increases our status [such as via acceptance by like-minded others within an 'in-group,' who might hold certain views about mass shooters, gun control, etc. - Aron] and well-being."

As well, "while unintelligent people are more easily misled by other people, intelligent people are more easily misled by themselves. They’re better at convincing themselves of things they want to believe rather than things that are actually true. ... being better at reasoning makes them better at rationalizing."

Thus calling those with opposing views "dumb" – or even applying that term solely to those who persist in holding them, when evidence dictates they should be modifying their views – isn't accurate, no matter how satisfying calling them that may be. There are other, more apt terms for such irrational behavior.

(Yes, I acknowledge that term is routinely used these days by speakers and writers across political and ideological spectrums, when referring to others with whom they disagree.)

To belabor the obvious, ChatGPT talks like a bureaucrat. If I have to read "complex and multifaceted" in lieu of making any judgment whatsoever one more time I may barf.

That said, thanks for a piece implicitly making the case that the thing is -- despite widespread midwit freakoutery over it -- a tool? No better or worse than the operator?